Let’s assume it worked and move on to the next step. If the answers match, this part of migration is finished. SELECT COUNT(*)įROM SNOWFLAKE_SAMPLE_DATA.TPCH_SF100.ORDERS (Pro tip: it’s not fast.) The following commands should both print the same result. It’s a nice feature to check that all the files were written properly. Snowflake can select data from a stage as well as a table.

This ensures that the Parquet files will preserve the original column names.

We will also show steps to optimize the table after loading. Along the way we’ll introduce some simple automation to generate SQL to create ClickHouse schema and load data. The rest of this article shows the data migration process for a Snowflake sample table containing 150M rows.

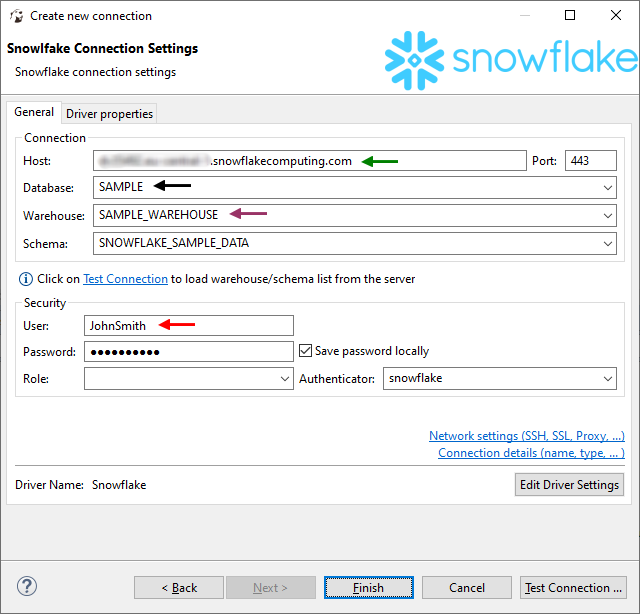

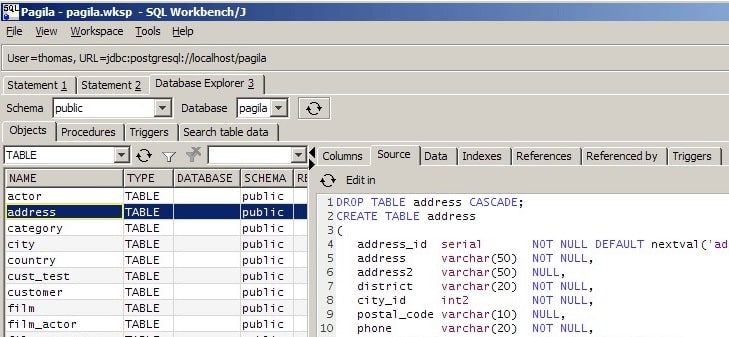

The procedure can move data temporarily for testing or for a permanent migration. There are many ways, but we’ll focus on an easy method that allows you to migrate large tables efficiently using S3 object storage and Parquet files, a popular storage format for columnar data. So perhaps you are wondering how your Snowflake application would run on ClickHouse?įortunately it is not hard to load data into ClickHouse and find out. That last benefit includes costs that are both low and predictable, important properties in uncertain economic times. ClickHouse offers consistent low latency response, freedom to run anywhere, and outstanding cost efficiency. That said, ClickHouse is catching up rapidly and is now the leading open source competitor. Note, that after you enter your account information, you can click the Test Connection and it will populate the Database, Warehouse, and Schema dropdowns allowing you to select from there.Snowflake is now the most popular SQL data warehouse according to the influential DB Engines site. Launch DBeaver and connect to your Snowflake Data Warehouse by filling in required information with your Snowflake account information. create schema ERD use schema ERD create table parent ( col1 integer not null, col2 integer not null, constraint parentkey_1 primary key (col1, col2) not enforced ) create table child1 ( col_a integer not null, col_b integer not null, constraint childkey_1 foreign key (col_a, col_b) references parent (col1, col2) not enforced ) create table child2 ( col_a integer not null, col_b integer not null, constraint childkey_2 foreign key (col_a, col_b) references parent (col1, col2) not enforced ) A parent table with a primary key constraint and two additional tables with foreign key constraints that point to the same columns as the parent table’s primary key constraint.

0 kommentar(er)

0 kommentar(er)